A Cloud Architect's Guide to the AI Era

I'm a cloud architect by trade. I have been designing and implementing cloud solutions for over 7 years now. I've gone through the evolution of initial cloud adoption, then the rise of containerization and microservices, then the serverless wave, and now the AI era. Each of these waves has brought new opportunities and challenges for cloud architects.

In each phase, it was interesting to see what different skills and mindsets I had to adopt to be successful.

- In the cloud adoption phase, convincing both technical and non-technical stakeholders of the benefits of cloud was key.

- In the containerization and microservices phase, understanding how to design systems and what software architecture patterns work well with them was the hard part.

- The serverless wave was the most fun for me. You get new toys with a lot of functionality and none of the boring operational overhead. The challenge was understanding the solution-specific trade-offs and how to design systems that are cost-effective, scalable, and maintainable.

And now, here we are in the AI era, with its opportunities and challenges. The big question is, what does this mean for cloud architects?

New opportunities

There is no doubt that AI unlocks new opportunities for organizations both big and small. AI is part of large-scale company initiatives, but so far it has made the most impact on individuals. People fold AI into their existing workflows, handing it repetitive and one-off tasks. New tools spread by word of mouth alone. I'm going to skip over the data security aspect of this for now. (But don't think for a second that this is not an important topic.)

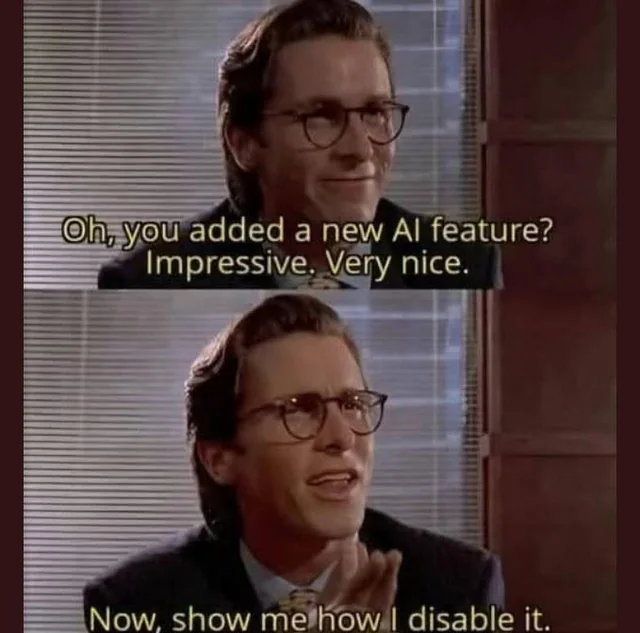

At a corporate level, leadership faces a fundamental strategic crossroads with AI, with every decision weighed by commercial and governance pressures. The first path is to turn AI inward, deploying it to optimize internal operations and create a more efficient, data-driven organization. The second path is to turn AI outward, embedding it into customer-facing products to create new revenue streams or build a competitive edge. The debate over which path yields a better return is ongoing, but my perspective is that the safer, more sustainable starting point is internal. AI is a technology that demands experimentation, and it's wiser to work through its challenges on your own processes before exposing your customers to them. I say that as a user of products with half-baked AI features that frustrate me more than I care to admit. 😅

On the other hand, the extraordinary boom in AI startups (both those developing the technology and those incorporating it) shows that people are not sitting idle and have ideas on how to put it into practice. Personally, I prefer that the tools I already use don't reimagine their entire product just to squeeze in a new AI feature. By the same principle, I think companies that use AI to polish their current offering fare better than those that just slap a generative feature on.

The real opportunity: the internal flywheel

For established businesses, the most effective approach is to integrate AI to augment existing workflows. Instead of building a novel, greenfield product, you identify a well-functioning business process and use AI as a superpower to improve its outcome and its overall efficiency.

This internal-first strategy is self-contained. It doesn't need to handle the chaos of diverse, unregulated user input, making it far less risky and easier to implement than user-facing changes. More importantly, it can create a powerful flywheel effect: a better process generates better data, which in turn trains a better model, further improving the process. It’s a feedback loop that requires relatively little maintenance once established.

You don't even need to build something from scratch. A great starting point is to analyze your existing data pipelines (whether they use traditional algorithms or older ML models) and see if they can be enhanced. Why take the risk of developing a completely new product when there is untapped optimization potential in a system that already works?

Let's use a concrete, hypothetical example. You run an e-commerce clothing store and want to increase the average basket size. The traditional approach is a standard product recommendation engine. The hyped-up AI approach might be to build an AR "try-on" feature or a personalized generative AI shopping assistant.

A more pragmatic, high-impact approach lies in the middle. You could generate rich embeddings from a combination of product images, descriptions, and user profile data. With these higher-quality embeddings, you could instantly improve your existing recommendation engine or create novel "vibe-based" filters for your customers (e.g., "cozy autumn evening" or "minimalist beach trip"). The uplift from these low-hanging-fruit projects is often significant.

Don't you just want to click them? 🙃
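To make the middle path more concrete, here is a minimal sketch of a vibe-based filter. It assumes you already have image and text embeddings for each product from some embedding model (the model itself is out of scope here) and that a vibe query such as "cozy autumn evening" is embedded with the same model. The three-dimensional vectors, SKU names, and the 50/50 image/text weighting are made up for illustration; real embeddings have hundreds of dimensions. Products are ranked by cosine similarity to the query.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)

def combine(image_vec, text_vec, image_weight=0.5):
    """Blend image and text embeddings into one product embedding (weighted average, then normalized)."""
    return normalize([image_weight * i + (1 - image_weight) * t
                      for i, t in zip(image_vec, text_vec)])

def cosine(a, b):
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))

def vibe_filter(query_vec, catalog, top_k=2):
    """Return the top_k SKUs whose embeddings are closest to the vibe query."""
    ranked = sorted(catalog, key=lambda sku: cosine(query_vec, catalog[sku]), reverse=True)
    return ranked[:top_k]

# Toy embeddings (hypothetical); real ones come from an embedding model.
catalog = {
    "wool-sweater": combine([0.9, 0.1, 0.0], [0.8, 0.2, 0.1]),
    "linen-shirt": combine([0.1, 0.9, 0.2], [0.0, 0.8, 0.3]),
    "rain-jacket": combine([0.5, 0.2, 0.8], [0.4, 0.1, 0.9]),
}
cozy_autumn_evening = [1.0, 0.0, 0.2]  # stand-in for the embedded text query

print(vibe_filter(cozy_autumn_evening, catalog))  # ['wool-sweater', 'rain-jacket']
```

The same ranking function could feed an existing recommendation engine: swap the vibe query for the embedding of an item the customer just viewed, or blend in a user profile vector the same way `combine` blends image and text.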

My point is this: you don't have to reinvent your business. You win by using AI to become exceptionally good at what you already do. And let's be honest, none of these features will likely provide as much immediate uplift as simply implementing Klarna or Afterpay. 😉

Once a company commits to integrating AI into a core offering, it must decide how deep that integration should go. This brings us directly to the first major challenge.

New challenges

Interoperability

The power of your AI features depends on how deeply they are integrated with your existing technologies.

Startups have an advantage here: they have no existing stack to stay interoperable with, so they can build their architecture around AI from day one.

Established companies face a much tougher problem. The effort required versus the payoff of a deep integration plays a huge role in their strategy. This is precisely why most companies, at least in this first wave of deployment, have opted for an "AI as an add-on" strategy. The AI is not part of the core product; it sits closer to the user, often as a chatbot or a "magic" text box. It's typically only capable of performing tasks already available through the UI, acting as a natural language wrapper around existing backend functionality. This is a safe bet, but it often doesn't improve the user experience by a revolutionary margin. It makes sense if we assume the product's UI and UX are already optimized enough that users feel confident and productive.
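As a rough illustration of the natural language wrapper pattern, here is a minimal sketch that assumes the model has been prompted to reply with JSON naming one tool and its arguments (the LLM call itself is omitted). The backend functions `get_order_status` and `list_recent_orders` are hypothetical stand-ins for endpoints the UI already exposes. The key idea is an allowlist: the assistant can only reach functions that are explicitly registered, so it cannot do anything the UI could not already do.

```python
import json

# Hypothetical backend functions that the UI already exposes.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id} is in transit"

def list_recent_orders(limit: int = 3) -> list:
    return [f"ORD-{n}" for n in range(1, limit + 1)]

# Allowlist: the assistant can only reach functions registered here.
TOOLS = {
    "get_order_status": get_order_status,
    "list_recent_orders": list_recent_orders,
}

def dispatch(model_reply: str) -> dict:
    """Map the model's JSON reply onto an existing backend call, rejecting anything else."""
    try:
        request = json.loads(model_reply)
    except json.JSONDecodeError:
        return {"error": "reply was not valid JSON"}
    if not isinstance(request, dict):
        return {"error": "reply must be a JSON object"}
    name, args = request.get("tool"), request.get("args", {})
    if not isinstance(name, str) or name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    if not isinstance(args, dict):
        return {"error": "args must be a JSON object"}
    try:
        return {"result": TOOLS[name](**args)}
    except TypeError as exc:  # missing, unexpected, or wrongly typed arguments
        return {"error": str(exc)}

print(dispatch('{"tool": "get_order_status", "args": {"order_id": "A1"}}'))
# {'result': 'Order A1 is in transit'}
```

Errors come back as data rather than exceptions, so they can be fed back to the model or shown to the user instead of crashing the request.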

Deeper integration often ends up reimagining the product from its core. This is where things get architecturally complex. It's not just about calling a new API; it's about rethinking data flows, service boundaries, and user interactions. This path is extremely difficult: it brings a growing set of additional challenges and a long implementation time to address them.

Security

As deeper integrations are put on the roadmap, crucial security considerations suddenly become primary concerns. From my perspective, these stem from three main sources:

- Breaking traditional isolation boundaries: For decades, we've built systems with clear separation: a front-end for presentation, a back-end for logic, and a database for storage. An advanced AI agent might need to orchestrate calls across all these layers in novel, user-driven ways. This blurs the lines, creating a much larger attack surface. We're no longer just securing predictable API endpoints; we're trying to secure a system against unpredictable, language-based instructions.

- The stochastic nature of AI: LLMs are not deterministic. You can ask the same question twice and get two different answers. This unpredictability is a nightmare from a security standpoint. We have to defend against "hallucinations," where the model confidently makes things up. "Jailbreaking" or prompt injection, where a malicious user tricks the model into bypassing its safety protocols or revealing sensitive information, is also a huge challenge to mitigate. As an architect, you have to design new validation layers and "guardrails" for outputs you can't fully predict.

- The data you touch: To perform higher-order functions, an AI agent needs access to a broad range of data. The principle of least privilege becomes incredibly difficult to enforce. How do you grant an AI just enough access to be useful for one user's request without accidentally giving it the keys to the entire kingdom for another? Designing strict and context-aware data access policies is a massive architectural challenge.
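One way to approach both the guardrail and the least-privilege problems is to treat every action the model proposes as untrusted input and check it against the permissions of the user who made the request, never against a broad service account. The sketch below assumes the model proposes actions as small dictionaries; the action names and scope strings are hypothetical. It denies by default, so malformed or injected output fails closed.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class RequestContext:
    """The requesting user; the agent borrows these permissions for one request only."""
    user_id: str
    scopes: frozenset = field(default_factory=frozenset)

# Hypothetical mapping from agent actions to the scope each one requires.
REQUIRED_SCOPE = {
    "read_own_orders": "orders:read:self",
    "read_all_orders": "orders:read:all",
    "issue_refund": "refunds:write",
}

def authorize(action: str, target_user: str, ctx: RequestContext) -> bool:
    """Allow only what the requesting user could do themselves; deny everything else."""
    scope = REQUIRED_SCOPE.get(action)
    if scope is None or scope not in ctx.scopes:
        return False
    # Self-scoped actions may only touch the requesting user's own data.
    if scope.endswith(":self") and target_user != ctx.user_id:
        return False
    return True

def guard(proposal, ctx: RequestContext) -> bool:
    """Validate an LLM-proposed action before anything executes. Fails closed."""
    if not isinstance(proposal, dict):
        return False
    action = proposal.get("action")
    target = proposal.get("target_user")
    if not isinstance(action, str) or not isinstance(target, str):
        return False
    return authorize(action, target, ctx)

alice = RequestContext("alice", frozenset({"orders:read:self"}))
print(guard({"action": "read_own_orders", "target_user": "alice"}, alice))  # True
print(guard({"action": "read_own_orders", "target_user": "bob"}, alice))    # False
```

Even if a prompt injection convinces the model to ask for another customer's orders, the check runs outside the model and the request is refused.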

Cost and performance

Then there's the question we eventually must address: the sheer cost of running these models. Every call to a state-of-the-art model costs money. This is a fundamental shift from a predictable infrastructure cost to a variable cost that's tied directly to user engagement. The big question is, who absorbs this cost? Is it passed directly to customers as a premium feature? Is it bundled into the existing subscription? And most importantly, is the feature valuable enough that customers are willing to pay for it?

From an architect's chair, this is tied directly to performance. The "smarter", more capable models are generally slower and more expensive. Do you opt for a faster, cheaper model that might not be as helpful, or the slower, more expensive one that provides a better user experience? This trade-off impacts everything from system responsiveness to the monthly cloud bill. We have to design for latency, perhaps with streaming responses or background processing, while keeping a close eye on the cost-per-user.
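To make the cost and latency trade-off concrete, here is a back-of-the-envelope sketch. The model names, per-million-token prices, and latency figures are hypothetical placeholders, not any provider's real numbers; substitute your own price sheet and load-test results. It estimates monthly cost per user from token usage and engagement, then picks the cheapest model that meets a quality tier within a latency budget, falling back to the fastest capable model when nothing fits.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelOption:
    name: str                # hypothetical model identifier
    input_usd_per_m: float   # hypothetical price per million input tokens
    output_usd_per_m: float  # hypothetical price per million output tokens
    p95_latency_ms: int      # from your own load tests
    quality_tier: int        # your own rating: 1 = basic, 2 = advanced

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """USD cost of a single request."""
        return (input_tokens * self.input_usd_per_m
                + output_tokens * self.output_usd_per_m) / 1_000_000

def monthly_cost_per_user(model: ModelOption, input_tokens: int, output_tokens: int,
                          requests_per_day: float, days: int = 30) -> float:
    """Variable cost: scales linearly with how often each user engages."""
    return model.cost(input_tokens, output_tokens) * requests_per_day * days

def pick_model(models, required_tier: int, latency_budget_ms: int,
               input_tokens: int, output_tokens: int) -> ModelOption:
    """Cheapest model meeting the quality tier within the latency budget; if none
    fits, the fastest capable model (then stream it or move it to the background)."""
    capable = [m for m in models if m.quality_tier >= required_tier]
    if not capable:
        raise ValueError("no model meets the required quality tier")
    in_budget = [m for m in capable if m.p95_latency_ms <= latency_budget_ms]
    if in_budget:
        return min(in_budget, key=lambda m: m.cost(input_tokens, output_tokens))
    return min(capable, key=lambda m: m.p95_latency_ms)

MODELS = [
    ModelOption("small-fast", 0.15, 0.60, 400, 1),
    ModelOption("large-slow", 3.00, 15.00, 3000, 2),
]

for m in MODELS:  # 2,000 input + 500 output tokens per request, 20 requests/day
    print(m.name, round(monthly_cost_per_user(m, 2000, 500, 20), 2))
# small-fast 0.36
# large-slow 8.1
print(pick_model(MODELS, 2, 1000, 2000, 500).name)  # large-slow
```

With these made-up prices the same engagement costs about $0.36 versus $8.10 per user per month, which is exactly the kind of gap that decides whether a feature is bundled or sold as a premium add-on.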

Reliance on third-party APIs in core business

Most companies aren't building their own foundation models. They're relying on APIs from a handful of large providers. This introduces a significant business risk. Is it wise to build a core feature of your product on a service you don't control? What happens if your provider suddenly raises its prices tenfold, changes its model's behavior in a way that breaks your features, or deprecates the version you rely on? You could get "rug-pulled" with very little notice. Furthermore, their reliability becomes your reliability. When their API is down, your feature is down, and it's your customer support team that faces the complaints.

As an architect, you have to plan for this, because it's not uncommon to see multi-day sporadic failures from even the largest providers. This means our patterns for failure handling must be top-notch, incorporating sophisticated retry logic and queuing systems to handle API outages or rate limits. It also forces crucial architectural decisions between batch processing for offline tasks (like nightly reports) and streaming for real-time interactions (like a chatbot), as each demands fundamentally different infrastructure and design patterns. The alternative to all this is self-hosting, but that comes with its own mountain of cost and complexity.
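Here is a minimal sketch of the failure-handling side, assuming each provider is wrapped in a small adapter function that raises a common `TransientError` on rate limits, outages, or timeouts (the exception and adapter names are hypothetical). It combines two common patterns: retries with full-jitter exponential backoff, and an ordered fallback across providers. Falling back to a second provider is only safe if your prompts have been tested against it, since models differ in behavior.

```python
import random
import time
from typing import Callable, Sequence

class TransientError(Exception):
    """Hypothetical common error for retryable failures: rate limits, outages, timeouts."""

def call_with_retries(fn: Callable[[], str], max_attempts: int = 4, base: float = 0.5,
                      cap: float = 30.0, sleep=time.sleep, rng=random.random) -> str:
    """Retry fn with full-jitter exponential backoff: before retry n, wait a random time
    between 0 and min(cap, base * 2**n) seconds so clients don't retry in lockstep."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts for this provider
            sleep(rng() * min(cap, base * 2 ** attempt))
    raise ValueError("max_attempts must be at least 1")

def complete_with_fallback(prompt: str,
                           providers: Sequence[tuple[str, Callable[[str], str]]],
                           **retry_opts) -> tuple[str, str]:
    """Try each (name, adapter) pair in order, retrying each before moving to the next."""
    failures = []
    for name, call in providers:
        try:
            return name, call_with_retries(lambda: call(prompt), **retry_opts)
        except TransientError as exc:
            failures.append(f"{name}: {exc}")
    raise TransientError("all providers failed; " + "; ".join(failures))

# Hypothetical adapters for illustration.
def primary(prompt: str) -> str:
    raise TransientError("503 from primary")

def secondary(prompt: str) -> str:
    return f"secondary answered: {prompt}"

print(complete_with_fallback("hi", [("primary", primary), ("secondary", secondary)],
                             sleep=lambda _: None))
# ('secondary', 'secondary answered: hi')
```

For offline work like nightly reports, the final "all providers failed" exception is a natural point to push the job onto a queue and try again later; for a real-time chatbot you would show a graceful degraded response instead.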

Writing and shipping code

The tooling and ways of working are also changing rapidly. Many teams are already shipping code written with AI assistants like GitHub Copilot. It increases development speed, but you must have a strategy to review that code for security and maintainability. Also think about testing and deployment: how does it change our CI/CD pipelines?

The enterprise perspective

For large enterprises, all the challenges above are magnified. Governance becomes a primary concern. How do you ensure that proprietary company data sent to a third-party AI isn't used for training? How do you audit the decisions or suggestions made by an AI? The risk of a data breach, whether through a sophisticated attack or a simple misconfiguration, is enormous. Add to this the pressure from shareholders and the commercial need to show a return on a very expensive AI investment, and the stakes become incredibly high.
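On the auditing question, one common building block is an append-only log where each entry records who asked, which model version answered, and fingerprints of the prompt and output, chained by hash so that later edits are detectable. The sketch below is a minimal, stdlib-only illustration; the field names and the `model-x` identifier are assumptions, and storing SHA-256 hashes instead of raw text is just one option for when policy forbids retaining the content itself. A production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def audit_record(user_id: str, model: str, prompt: str, output: str, prev_hash: str = "") -> dict:
    """One audit entry for an AI suggestion; prev_hash links it to the previous entry."""
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model": model,  # the exact model/version string the provider reports
        "prompt_sha256": sha256(prompt),
        "output_sha256": sha256(output),
        "prev_hash": prev_hash,
    }
    entry["entry_hash"] = sha256(json.dumps(entry, sort_keys=True))
    return entry

def verify_chain(entries) -> bool:
    """Recompute every hash; any edited, reordered, or removed entry breaks the chain."""
    prev = ""
    for e in entries:
        body = {k: v for k, v in e.items() if k != "entry_hash"}
        if e["prev_hash"] != prev or sha256(json.dumps(body, sort_keys=True)) != e["entry_hash"]:
            return False
        prev = e["entry_hash"]
    return True

a = audit_record("alice", "model-x", "prompt text", "model output")
b = audit_record("bob", "model-x", "another prompt", "another output", prev_hash=a["entry_hash"])
print(verify_chain([a, b]))  # True
tampered = dict(b, user_id="mallory")
print(verify_chain([a, tampered]))  # False
```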

Where it takes us

So, where does this leave us, the cloud architects navigating this new era?

As we've seen, the opportunity for AI adoption is huge, both to supercharge internal processes and to create brand new products. But the path forward is full of non-trivial challenges in security, cost, and reliability. Getting them wrong isn't just a technical failure; it can be incredibly costly to the business.

Luckily, this weight doesn't fall entirely on the shoulders of a cloud architect. Solving these problems is a team effort. It means working closely together with talented colleagues from ML, data, security, and product engineering. The hype and the challenges are both a shared responsibility.

In a team like this, the architect’s job is to connect the dots. Our core skill has always been understanding how everything fits together: the product, the governance rules, the budget, and the overall business goals. That skill is more important than ever with AI. We’re the ones who have to ask the tough questions:

- How does integrating this third-party AI service affect our overall system resilience, and what is our fallback plan?

- What new security vulnerabilities do we open up by giving an AI agent access to our internal APIs, and how do we mitigate them?

- How do we build something that users love, that is fast, and that is commercially viable at scale?

Just as we did with the moves to cloud, containers, and serverless, our job is to keep things grounded. We need to stay curious, keep learning, and always be on the lookout for what could go wrong. Most importantly, we have to make sure that the exciting technical work we're doing is practical and actually makes good business sense.

For me, this feels familiar, just in a new and exciting field. I’m enjoying the challenge of working on these AI projects and can't wait to see what problems we get to solve next. The fundamentals of our job haven't changed: we still build systems that are strong, safe, and affordable. Now, we just have powerful new tools to work with, and the real challenge is learning to use them well. 🚧 🏗️ 🚧